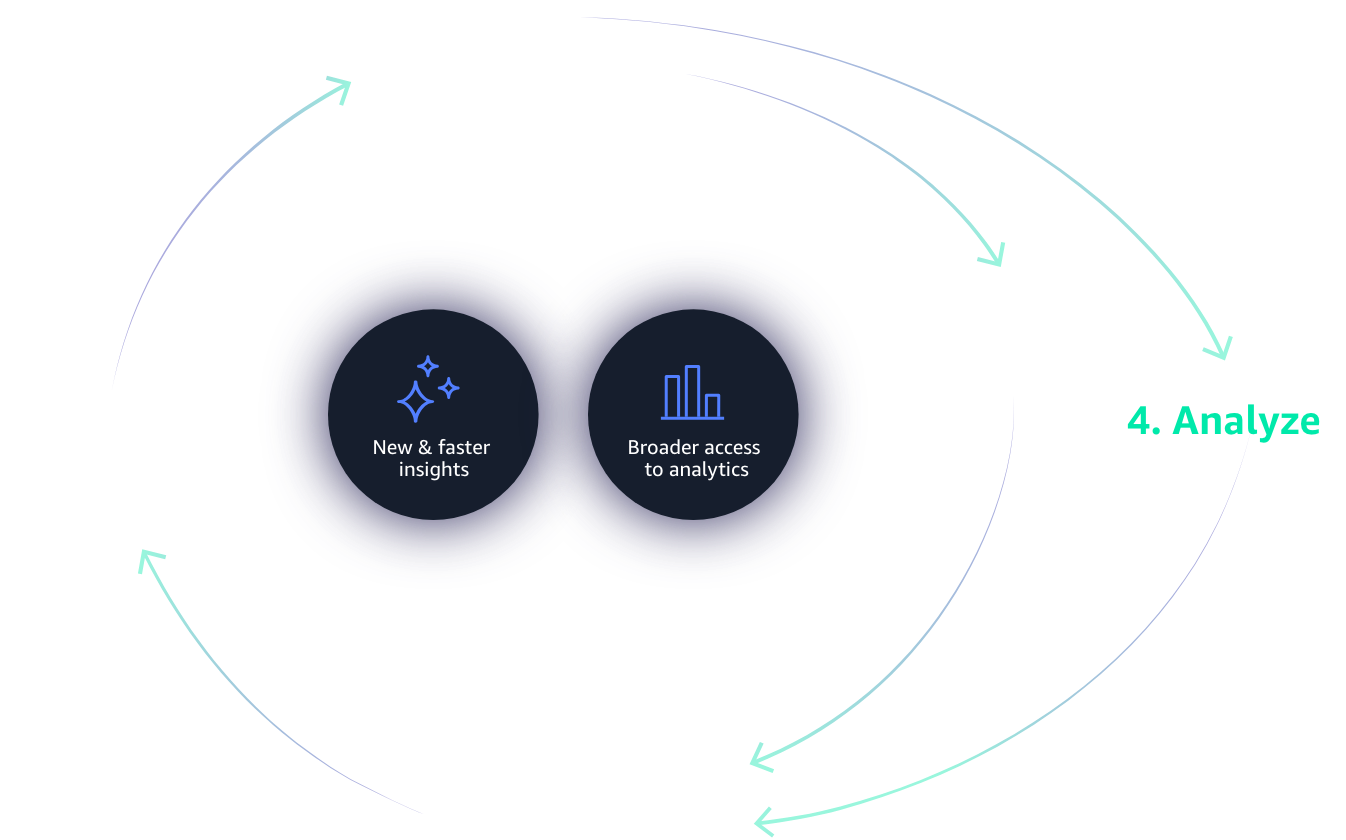

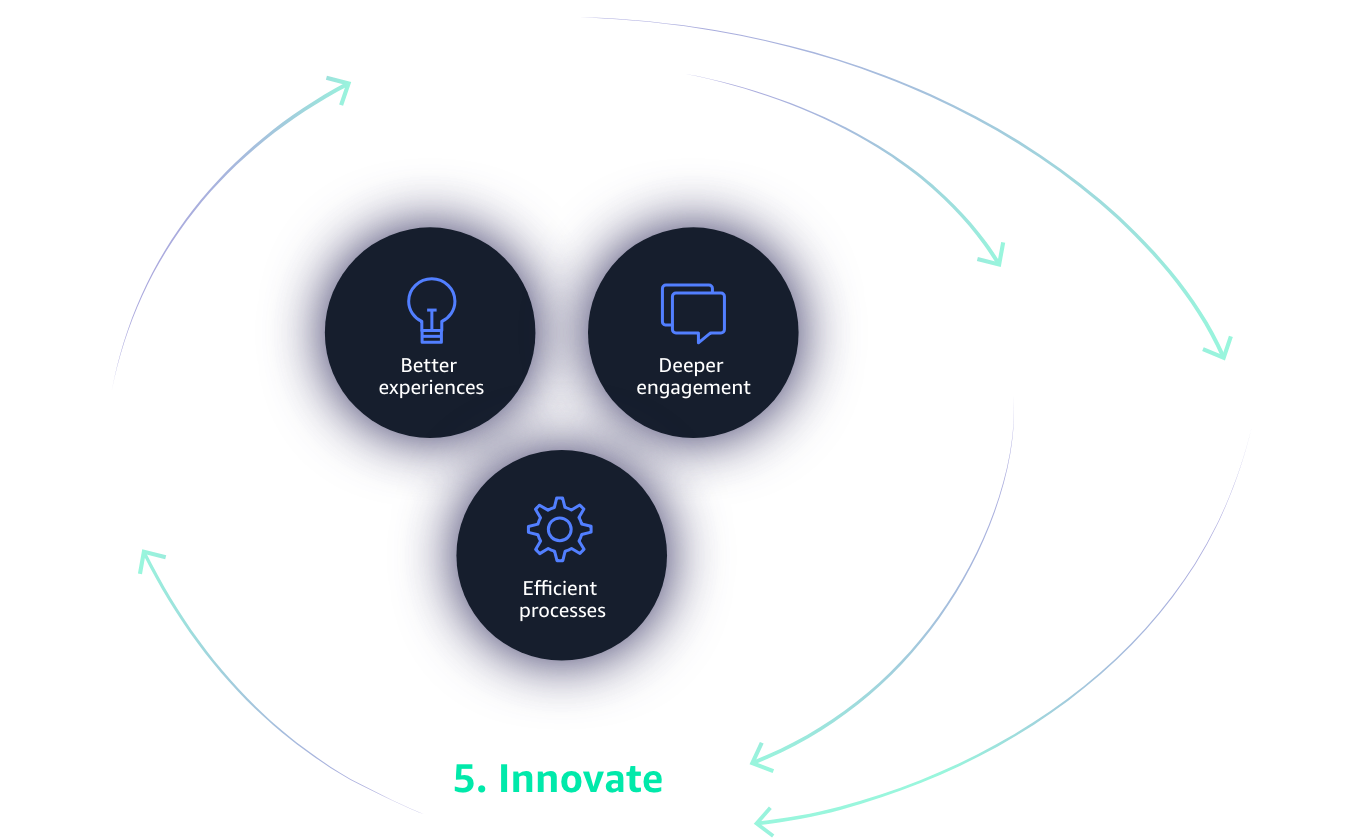

Create perpetual business momentum

According to thermodynamics, perpetual motion is impossible. But who said the laws of physics have to apply to business? When applied properly, data can generate self-sustaining, perpetually accelerating momentum for your organization.

Why a flywheel?

The flywheel, as popularized by author Jim Collins, is a self-reinforcing loop made up of a few key initiatives that feed and drive each other to build a long-term business. In the early 90s, Jeff Bezos incubated his initial idea for Amazon.com using the Amazon flywheel, an economic engine that uses growth and scale to improve the customer experience through greater selection and lower cost.

Learn more about "The Flywheel Effect" from Jim Collins »

Building a successful Data Flywheel

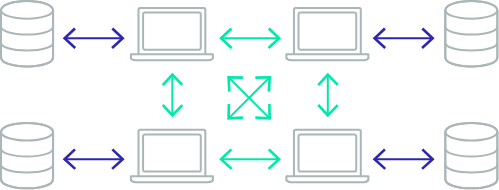

One of the defining characteristics of the Data Flywheel is that no “one thing” powers it, and organizations that search for a single, simple solution will likely lose their way. The Data Flywheel moves by many components acting in concert, forming a whole that’s greater than the sum of its parts. Organizations that take the time to develop each of these components, implementing the most relevant technologies and procedures at every phase, will prosper the most from the Data Flywheel.

Old-guard commercial databases

- Very expensive: Legacy databases are expensive, and you end up paying more for over-provisioned capacity.
- Proprietary and lock-in: Proprietary database functions lock in your applications and limit innovation.
- Punitive licensing: Complex contractual licensing restricts flexibility and adds unpredictable costs.
- Unexpected audits: Frequent audits can add unplanned costs to your deployment.
- Significant CAPEX investments: On-premises CAPEX never ends. If hardware needs to be upgraded, your budget takes the hit.

Customers are moving to open source databases

Because of the challenges with old-guard commercial databases, customers are moving as fast as they can to open source alternatives like MySQL, PostgreSQL, and MariaDB. However, they also want the performance and availability of high-end commercial databases along with the simplicity and cost-effectiveness of open source databases.

Get the best of both worlds

Customers migrating their databases to Amazon Aurora get the performance and availability of commercial-grade databases with the simplicity and cost effectiveness of open-source databases.

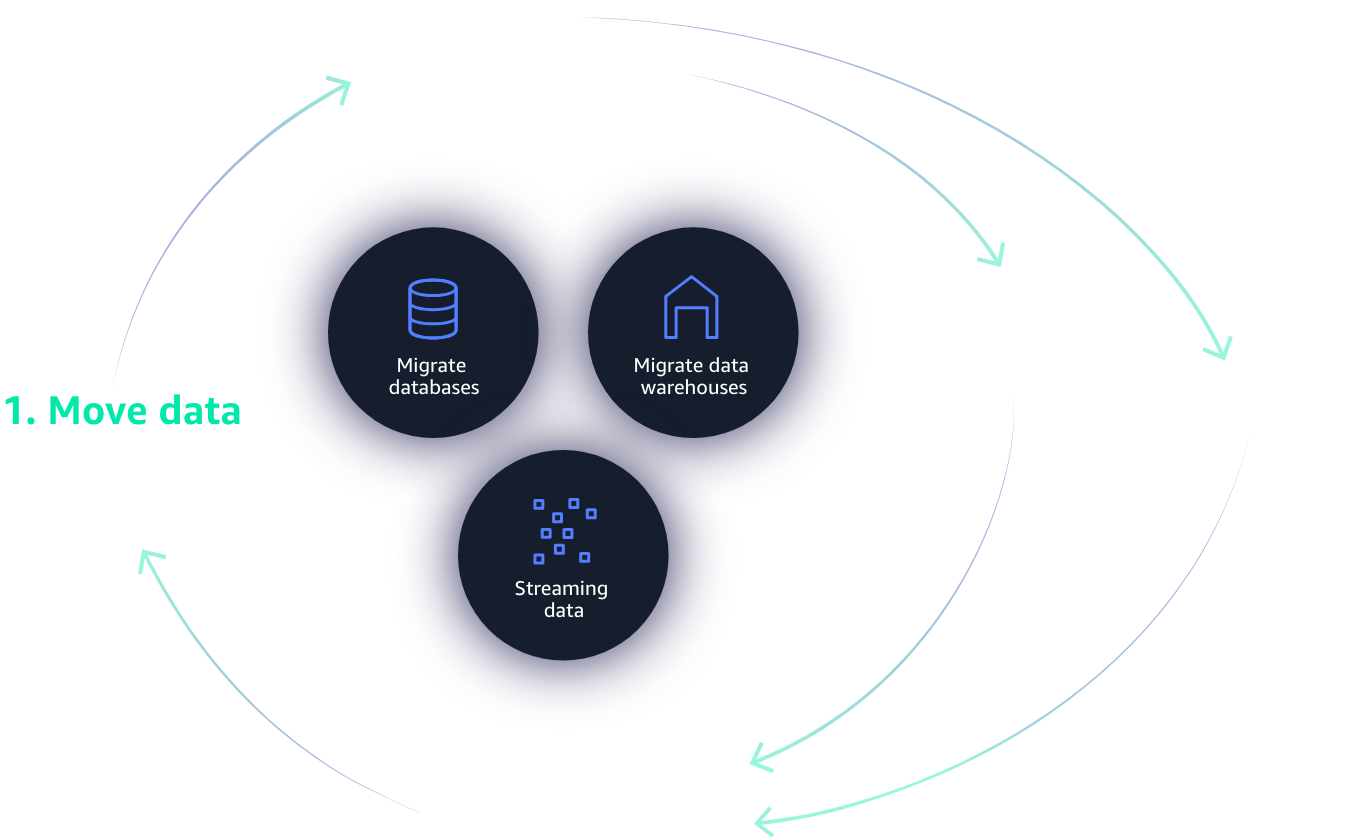

Don’t stop at data, move your workloads too

Data storage, data warehouses, and databases all have a home in the cloud. In addition, you can save time and cost by moving your non-relational databases like MongoDB, your big data processing workloads like Hadoop and Spark, your operational analytics workloads like Elasticsearch, and your real-time analytics workloads like Kafka to the cloud.

Relational databases

Migrate from expensive Oracle and SQL Server databases to Amazon Aurora.

Non-relational databases

Move key-value stores to Amazon DynamoDB and document databases like MongoDB to Amazon DocumentDB.

Data warehouses

Migrate from Teradata, Oracle, and SQL Server Data Warehouse to Amazon Redshift.

Hadoop and Spark

Move on-premises Hadoop and Spark deployments to Amazon EMR for time and cost savings.

Operational analytics

Elasticsearch, Logstash, and Kibana (ELK) on-premises can move to Amazon Elasticsearch Service for time and cost savings.

Real-time analytics

Apache Kafka deployments can move to Amazon Managed Streaming for Apache Kafka (Amazon MSK), and Amazon Kinesis can prepare, analyze, and load data streams into data stores and analytics tools for immediate use.

Explore stories of customers that have moved data and workloads to the cloud

Equinox gets its customer experience in shape

Through the cloud, Equinox Fitness Clubs was able to analyze clickstream and equipment usage data to provide a more engaging customer experience.

Verizon improved performance by 40%

By moving to Amazon Aurora, Verizon improved delivery of innovative applications and services to customers.

Why fully managed databases?

Database management can become a major burden to your business. In addition to hardware and software installation, you have to worry about database configuration, patching, and backups. You also have to handle complicated cluster configuration for data replication and high availability, tedious capacity planning, and scaling clusters for compute and storage.

Learn more at the AWS Database, Analytics & Management On Demand

Fully managed databases boost productivity and efficiency

- Lower costs: Fully managed cloud databases cost substantially less to run than databases managed in-house.
- Better availability: The right cloud vendor can keep your databases continuously available.
- Greater time savings: With database admin tasks off their plate, who knows what your team can achieve?
- Enterprise-grade scalability: When growth doesn’t add hardware or admin time, even small businesses can scale with less worry.
- Bigger potential: Easily add new services such as data warehouses, in-memory data stores, and graph and time series databases.

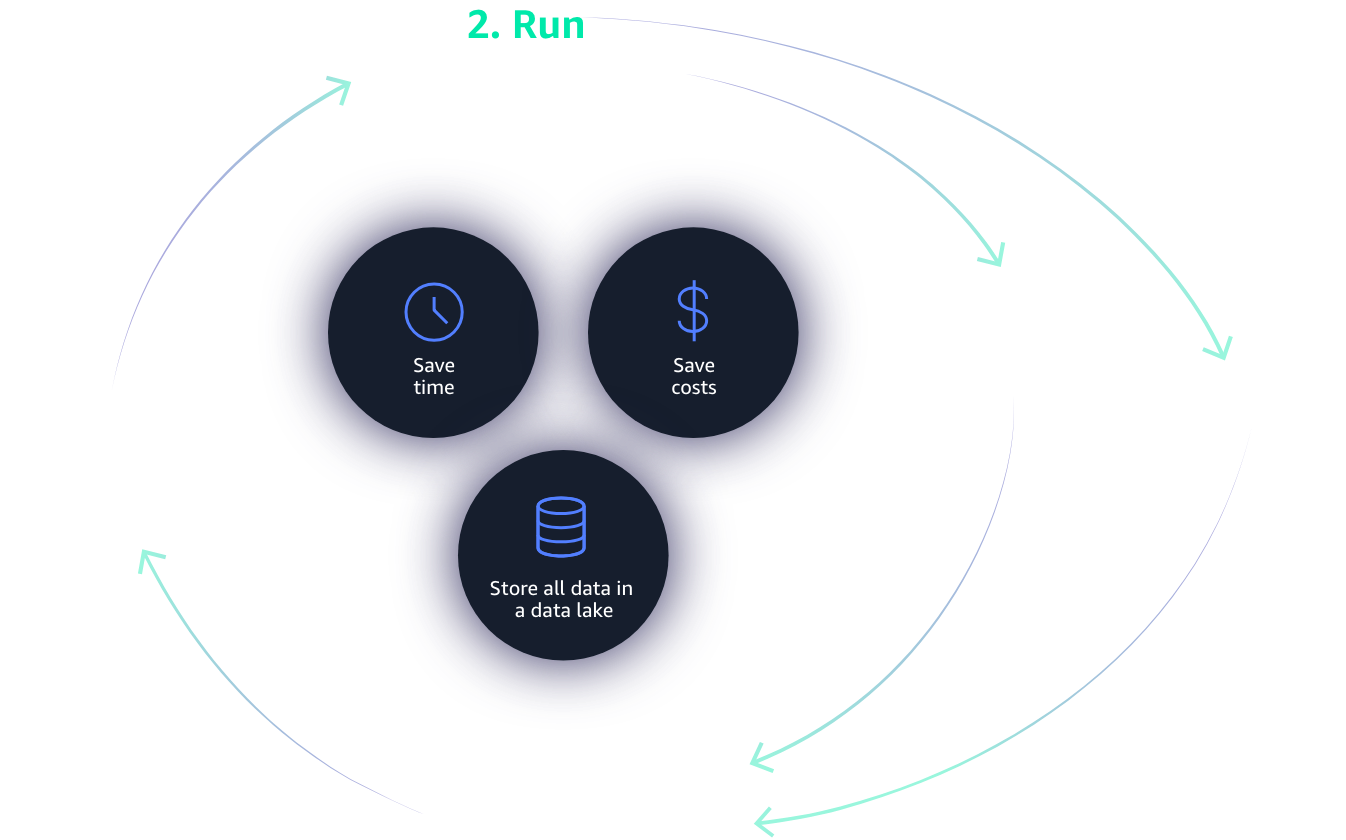

Store and retain all your data

When moving your data to the cloud, you no longer have to worry about deciding what data to keep and what to discard. With multiple low-cost, tiered storage options for hot and cold-tier data, you can keep all the data you need and analyze it at any time.

Explore success stories of managed databases and tiered storage

Autodesk increases database scalability and reduces replication lag

Autodesk switched to Amazon Aurora, a MySQL-compatible relational database, to increase database scalability and reduce replication lag.

FanDuel achieves almost 100 percent uptime

FanDuel moved critical workloads to AWS using Amazon Aurora, achieving almost 100 percent uptime.

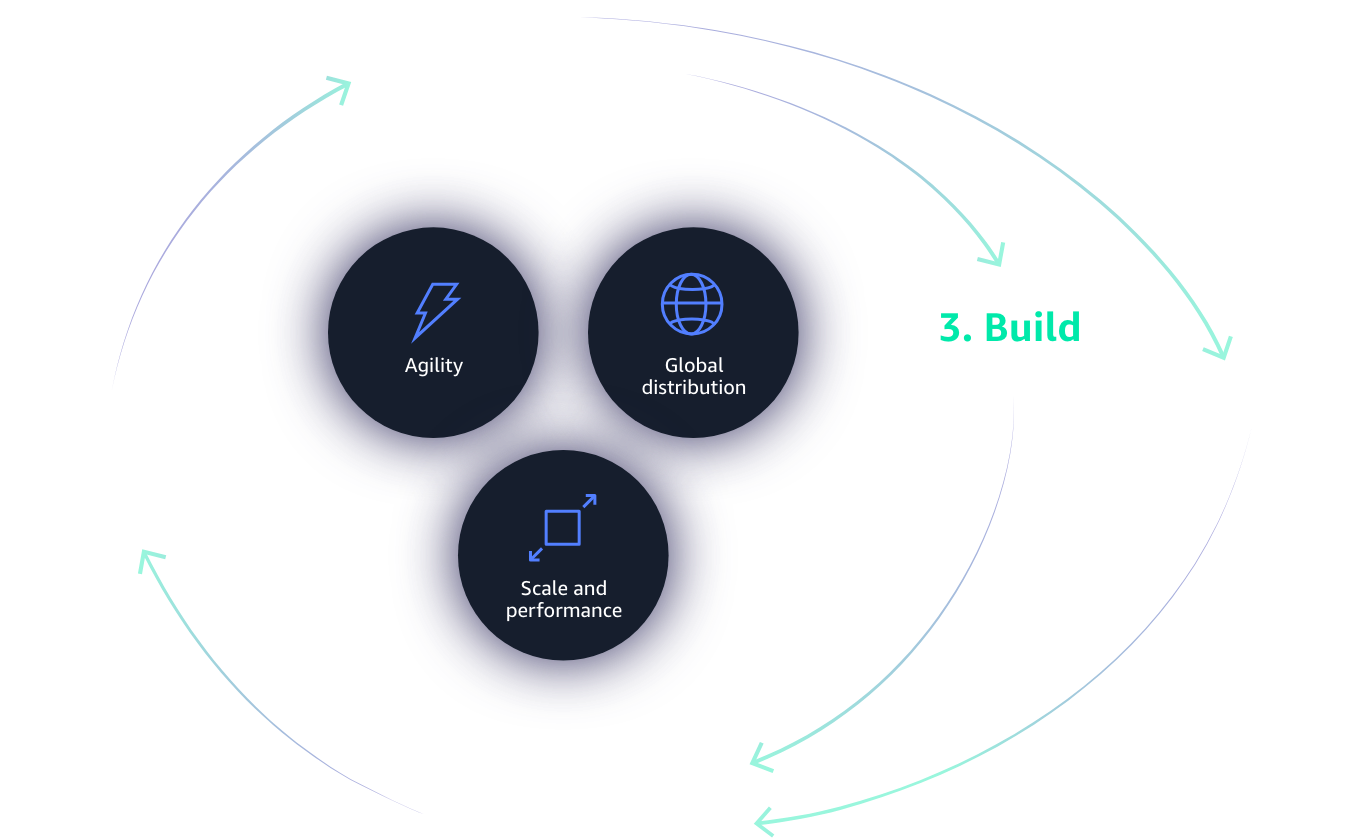

The rules for application design have changed

Modern application design must consider social, mobile, IoT, and global access. For today’s applications to deliver the best customer experience, they’ll need databases with massive storage capabilities, near real-time access speeds, and world-class scalability.

Requirements of applications today:

Data volume: TB–PB–EB

Locality: Global

Performance: Milliseconds-microseconds

Request Rate: Millions

Access: Web, Mobile, IoT, devices

Scale: Up-down, Out-In

Economics: Pay for what you use

Developer access: Instant API access

Store and retain all your data

- Changing your database strategy: The one-size-fits-all approach of using relational databases for everything is no longer enough.
- Breaking down complex apps: To ensure proper architecture and scalability, you need to examine every application component.
- Building highly distributed applications: Break down your complex applications into microservices.

Which databases are best for your workloads?

The best tool for a job usually differs by use case. That’s why developers should be building highly distributed applications using a multitude of purpose-built databases. Explore advantages and use cases for the most common application workloads below.

In relational database management systems (RDBMS), data is stored in a tabular form of columns and rows, and data is queried using the Structured Query Language (SQL). Each column of a table represents an attribute, each row in a table represents a record, and each field in a table represents a data value. Relational databases are so popular because 1) SQL is easy to learn and use without needing to know the underlying schema and 2) database entries can be modified without specifying the entire body.
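As a rough illustration of the relational model described above, here is a minimal sketch using Python's built-in `sqlite3` module. The `customers` and `orders` tables, their columns, and the sample rows are hypothetical and exist only for this example; they are not taken from any AWS service.

```python
import sqlite3

# In-memory SQLite database; table and column names are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE orders ("
    " id INTEGER PRIMARY KEY,"
    " customer_id INTEGER NOT NULL REFERENCES customers(id),"
    " total REAL NOT NULL)"
)

# Each row is a record; each column is an attribute.
conn.executemany(
    "INSERT INTO customers (id, name) VALUES (?, ?)",
    [(1, "Ana"), (2, "Ben")],
)
conn.executemany(
    "INSERT INTO orders (id, customer_id, total) VALUES (?, ?, ?)",
    [(10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.0)],
)

# Update a single field without rewriting the rest of the record.
conn.execute("UPDATE orders SET total = ? WHERE id = ?", (18.0, 12))
conn.commit()

# A join combines rows from both tables through the shared key.
totals_by_customer = conn.execute(
    "SELECT c.name, SUM(o.total) FROM customers AS c"
    " JOIN orders AS o ON o.customer_id = c.id"
    " GROUP BY c.name ORDER BY c.name"
).fetchall()
```

The same SQL statements would typically run against a managed MySQL- or PostgreSQL-compatible engine with only the connection code changed, although dialect details can differ.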

Advantages

- Work well with structured data
- Support ACID transactional consistency and support “joins”
- Built-in data integrity
- Data accuracy and consistency
- Relationships in this system have constraints
- Limitless indexing

Use Cases

- ERP apps
- CRM
- Finance
- Transactions
- Data warehousing

A key-value database uses a simple key-value method to store data as a collection of key-value pairs in which the key serves as a unique identifier. Both keys and values can be anything, ranging from simple objects to complex compound objects. They are great for applications that need instant scale to meet growing or unpredictable workloads.
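The key-value access pattern can be sketched in a few lines of Python. The `KeyValueStore` class and the key naming scheme below are hypothetical, and a managed key-value service would add persistence, partitioning, and replication on top of this idea.

```python
from typing import Any, Dict, Optional


class KeyValueStore:
    """Toy in-process key-value store, for illustration only."""

    def __init__(self) -> None:
        self._items: Dict[str, Any] = {}

    def put(self, key: str, value: Any) -> None:
        # The key is the unique identifier; writing again overwrites the value.
        self._items[key] = value

    def get(self, key: str) -> Optional[Any]:
        # Lookups go straight to the key; there is no query planner or join.
        return self._items.get(key)

    def delete(self, key: str) -> None:
        self._items.pop(key, None)


store = KeyValueStore()
# Values can be simple strings or compound objects such as a shopping cart.
store.put("cart#user-42", {"items": [{"sku": "A1", "qty": 2}], "currency": "USD"})
store.put("pref#user-42", "dark-mode")

cart = store.get("cart#user-42")
```

Because every operation addresses a single key, this kind of store is straightforward to spread across many machines, which is one reason it suits unpredictable workloads.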

Advantages

- Simple data format accelerates reads and writes
- Value can be anything, including JSON, flexible schemas, etc.

Use Cases

- Real-time bidding
- Shopping cart
- Product catalog
- Customer preferences

In document databases, data is stored in JSON-like documents and JSON documents are first-class objects within the database. Documents are not a data type or a value; they are the key design point of the database. These databases make it easier for developers to store and query data by using the same document-model format developers use in their application code.
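To make the document model concrete, the sketch below stores JSON-like documents as Python dictionaries and queries them by a nested field. The helper names `get_path` and `find`, and the sample product documents, are assumptions made for this example rather than the API of any particular document database.

```python
from typing import Any, Dict, List


def get_path(document: Dict[str, Any], dotted_path: str) -> Any:
    """Return the value at a dotted path such as 'specs.color', or None if absent."""
    current: Any = document
    for part in dotted_path.split("."):
        if not isinstance(current, dict) or part not in current:
            return None
        current = current[part]
    return current


def find(collection: List[Dict[str, Any]], dotted_path: str, expected: Any) -> List[Dict[str, Any]]:
    """Return every document whose value at dotted_path equals expected."""
    return [doc for doc in collection if get_path(doc, dotted_path) == expected]


# Documents in one collection do not need to share the same shape.
products = [
    {"name": "Trail shoe", "specs": {"color": "red", "sizes": [40, 41, 42]}},
    {"name": "Gift card", "amount": 50},
    {"name": "Rain jacket", "specs": {"color": "blue"}, "tags": ["outdoor"]},
]

red_products = find(products, "specs.color", "red")
```

The documents have the same shape as the objects an application already works with, so no mapping layer is needed between the two.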

Advantages

- Flexible, semi-structured, and hierarchical
- Database evolves with application needs
- Flexible schema
- Representation of hierarchical and semi-structured data is easy
- Powerful indexing for fast querying
- Documents map naturally to object-oriented programming
- Easier flow of data to persistent layer
- Expressive query languages built for documents
- Ad-hoc queries and aggregations across documents

Use Cases

- Catalogs
- Content management systems
- User profiles / personalization
- Mobile

With the rise of real-time applications, in-memory databases are growing in popularity. In-memory databases predominantly rely on main memory for data storage, management, and manipulation. In-memory has been popularized by open-source software for memory caching, which can speed up dynamic databases by caching data to reduce the number of times an external data source must be queried.
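The caching behavior described above is often implemented with a cache-aside pattern. The following is a minimal sketch under that assumption; the `CacheAside` class, the `slow_lookup` function, and the 60-second expiry are all hypothetical and stand in for a real in-memory store placed in front of a slower data source.

```python
import time
from typing import Callable, Dict, List, Tuple


class CacheAside:
    """Keep recently loaded values in memory so repeat reads skip the backend."""

    def __init__(self, load: Callable[[str], str], ttl_seconds: float) -> None:
        self._load = load
        self._ttl = ttl_seconds
        # Maps key -> (expiry time, cached value).
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> str:
        now = time.monotonic()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # Cache hit: served from memory.
        value = self._load(key)  # Cache miss: query the external source once.
        self._entries[key] = (now + self._ttl, value)
        return value


backend_calls: List[str] = []


def slow_lookup(key: str) -> str:
    """Stand-in for a query against a disk-based database."""
    backend_calls.append(key)
    return f"profile-for-{key}"


cache = CacheAside(slow_lookup, ttl_seconds=60.0)
first = cache.get("user-42")   # Loads from the backend.
second = cache.get("user-42")  # Served from memory.
```

The second read never reaches the backend, which is how caching reduces the number of queries sent to the external data source.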

Advantages

- Sub-millisecond latency
- Can perform millions of operations per second
- Significant performance gains: 3-4X or more when compared to disk-based alternatives
- Simpler instruction set
- Support for rich command set
- Works with any type of database, relational or non-relational, or even storage services

Use Cases

- Caching (50%+ use cases are caching)
- Session store
- Leaderboards
- Geospatial apps (like ride-hailing services)
- Pub/sub
- Real-time analytics

Graph databases are NoSQL databases that use a graph structure for semantic queries. A graph is essentially an index data structure; it never needs to load or touch unrelated data for a given query. In graph databases, data is stored in the form of nodes, edges, and properties.
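A small sketch can show why a graph query touches only related data. The node names, properties, and the `within_hops` function below are hypothetical; a real graph database exposes a query language instead of hand-written traversal, but the access pattern is similar.

```python
from collections import deque
from typing import Dict, List, Set

# Nodes carry properties; edges connect node identifiers.
nodes: Dict[str, Dict[str, str]] = {
    "ana": {"label": "Person"},
    "ben": {"label": "Person"},
    "cy": {"label": "Person"},
    "dee": {"label": "Person"},
    "eve": {"label": "Person"},
}
edges: Dict[str, List[str]] = {
    "ana": ["ben"],
    "ben": ["ana", "cy"],
    "cy": ["ben", "dee"],
    "dee": ["cy"],
    "eve": [],
}


def within_hops(start: str, max_hops: int) -> Set[str]:
    """Breadth-first traversal that only follows edges reachable from start."""
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        node, hops = frontier.popleft()
        if hops == max_hops:
            continue  # Do not expand beyond the requested distance.
        for neighbor in edges.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                frontier.append((neighbor, hops + 1))
    seen.discard(start)
    return seen


# Friends and friends-of-friends of "ana"; "dee" and "eve" are never reached.
nearby = within_hops("ana", 2)
```

The traversal cost depends on the size of the neighborhood being explored, not on the total number of nodes stored.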

Advantages

- Ability to make frequent schema changes
- Can manage huge, exploding volumes of data
- Real-time query response time
- Superior performance for querying related data, big or small
- Meets more intelligent data activation requirements
- Explicit semantics for each query, with no hidden assumptions
- Flexible online schema environment

Use Cases

- Fraud detection
- Social networking
- Recommendation engines
- Knowledge graphs

Time series databases (TSDBs) are optimized for time-stamped or time series data. Time series data is very different from other data workloads in that it typically arrives in time order, the data is append-only, and queries are always over a time interval.
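The three properties above (time-ordered arrival, append-only writes, interval queries) can be sketched with a sorted list and binary search. The `TimeSeries` class and the sample CPU readings are hypothetical, and real time series databases add compression, retention policies, and aggregation functions.

```python
import bisect
from typing import List, Tuple


class TimeSeries:
    """Append-only series of (timestamp, value) points kept in time order."""

    def __init__(self) -> None:
        self._timestamps: List[float] = []
        self._values: List[float] = []

    def append(self, timestamp: float, value: float) -> None:
        # Points are only ever added at the end, never updated in place.
        if self._timestamps and timestamp < self._timestamps[-1]:
            raise ValueError("points must arrive in time order")
        self._timestamps.append(timestamp)
        self._values.append(value)

    def query(self, start: float, end: float) -> List[Tuple[float, float]]:
        """Return points with start <= timestamp < end using binary search."""
        lo = bisect.bisect_left(self._timestamps, start)
        hi = bisect.bisect_left(self._timestamps, end)
        return list(zip(self._timestamps[lo:hi], self._values[lo:hi]))


cpu = TimeSeries()
for ts, load in [(0, 0.20), (60, 0.35), (120, 0.50), (180, 0.40)]:
    cpu.append(ts, load)

window = cpu.query(60, 180)
average_load = sum(value for _, value in window) / len(window)
```

Because the data is already sorted by time, an interval query only needs to locate the two boundaries instead of scanning every point.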

Advantages

- Ideal for measurements or events that are tracked, monitored, and aggregated over time
- High scalability for quickly accumulating time series data
- Robust usability for many functions, such as data retention policies, continuous queries, and flexible-time aggregations

Use Cases

- DevOps
- Application monitoring
- Industrial telemetry
- IoT applications

Ledger databases provide a transparent, immutable, and cryptographically verifiable transaction log owned by a central, trusted authority. Many organizations build applications with ledger-like functionality because they want to maintain an accurate history of their applications' data.
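One common way to make a transaction log cryptographically verifiable is a hash chain, where each entry's hash covers the previous entry's hash. The sketch below illustrates that general idea only; it is an assumption for teaching purposes, not a description of how any specific ledger database is implemented, and the function names and sample entries are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64


def entry_hash(previous_hash: str, payload: Dict[str, Any]) -> str:
    """Hash the payload together with the previous entry's hash."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((previous_hash + body).encode("utf-8")).hexdigest()


def append_entry(ledger: List[Dict[str, Any]], payload: Dict[str, Any]) -> None:
    """Add a new entry linked to the current end of the chain."""
    previous_hash = ledger[-1]["hash"] if ledger else GENESIS_HASH
    ledger.append(
        {
            "payload": payload,
            "previous_hash": previous_hash,
            "hash": entry_hash(previous_hash, payload),
        }
    )


def verify(ledger: List[Dict[str, Any]]) -> bool:
    """Recompute every hash; any edit to history breaks the chain."""
    previous_hash = GENESIS_HASH
    for entry in ledger:
        if entry["previous_hash"] != previous_hash:
            return False
        if entry["hash"] != entry_hash(previous_hash, entry["payload"]):
            return False
        previous_hash = entry["hash"]
    return True


ledger: List[Dict[str, Any]] = []
append_entry(ledger, {"account": "A-1", "credit": 100})
append_entry(ledger, {"account": "A-1", "debit": 30})
intact = verify(ledger)

# Simulate tampering with an earlier record.
ledger[0]["payload"]["credit"] = 1000
tamper_detected = not verify(ledger)
```

Changing any historical payload changes its recomputed hash, so verification fails from that entry onward.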

Advantages

- Maintain accurate history of application data
- Immutable and transparent
- Cryptographically verifiable
- Highly scalable

Use Cases

- Finance: Keep track of ledger data such as credits and debits
- Manufacturing: Reconcile data between supply chain systems to track full manufacturing history
- Insurance: Track claim transaction histories
- HR and payroll: Track and maintain a record of employee details

Discover stories of cloud database success

Airbnb travels by cloud

Airbnb moved its main MySQL database to the cloud, discovering greater flexibility and responsiveness.

Watch the case study video »

Duolingo learns fluent database

With cloud databases holding 31 million items, Duolingo achieves 24,000 reads per second.

Watch the case study video »

Data is growing exponentially

Data is a difficult beast to tame. It’s growing exponentially, coming in from new sources, becoming more diverse, and getting harder to process. With all that in mind, how can your business possibly capture, store, and analyze its data at the near-real-time rate needed to remain competitive?

Static analytics solutions don’t work

- Siloed: With data trapped in silos, seeing the big picture is nearly impossible.
- Delayed: Analytics based on yesterday’s data arrive too late to gain competitive advantage.
- Expensive: When analytics platforms cost more than the value of their insights, nobody wins.
- Relational-only: To get ahead, you need to analyze all your data, relational and non-relational alike.
- Cumbersome: What good is an analytics platform if no one can use it?
- Limited to specialists: Making more data-driven decisions means democratizing analytics.

Improve your analytics with data lakes

Data lakes allow you to collect and store any data, in its original format, in one centralized repository. They provide optimum scale, flexibility, durability, and availability. But best of all, they make performing analytics on all your data faster and produce smarter insights.

Data lakes optimize many types of analytics

From retrospective analysis and reporting to here-and-now real-time processing to predictions, data lakes are the ideal choice for any type of analytics. The most common cloud-based analytics types include:

- Operational analytics: Analytics focused on improving existing operations, commonly used for resource planning, system operations, and equipment failures.
- Dashboard visualizations: Reports, dashboards, and interactive charts providing at-a-glance visibility into financial, marketing, and sales data, and more.
- Quick interactive queries: Find-and-filter queries on static data that return sales reporting, marketing programs, inventory status, and other results without analysis.
- Big-data processing: Processing of huge, complex, and varied data sets to leverage clickstream and log analytics or social sentiment.
- Real-time streaming: Analysis of streaming data in transit, before it is stored, to enable credit scoring, fraud detection, cybersecurity, and beyond.
- Smart recommendations: Machine-learning algorithms analyze data for patterns and trends, enabling capabilities such as next-best-offer and human networking.
- Predictive analytics: Data analysis and modeling capabilities that predict future events and deliver “propensity to buy” and “next likely action” insights.

Build a solid case for data lakes

- More storage, less cost: Tiered storage across cloud databases, data warehouses, and data lakes lets you store more for less.
- Break down silos: Centralize all your data into an efficient, easily accessible data lake.
- Analyze all your data: With all your data in one place, you can generate richer, more accurate analytics.
- Expand data types: Store both structured and unstructured data in any format.
- Durable: Amazon S3 is designed for eleven nines (99.999999999%) of data durability because it automatically creates and stores copies of your data across multiple systems.
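To put "eleven nines" in perspective, the short calculation below works through the arithmetic. It assumes the durability figure is read as an annual, per-object design target, and the count of ten million stored objects is a hypothetical number chosen for the example.

```python
from fractions import Fraction

# Eleven nines, 99.999999999%, expressed exactly as a fraction.
durability = Fraction(99_999_999_999, 100_000_000_000)

# Assumption: the figure is an annual design target per stored object.
annual_loss_probability = 1 - durability

# Hypothetical number of objects stored.
objects_stored = 10_000_000

# Expected number of objects lost per year, and its reciprocal.
expected_losses_per_year = objects_stored * annual_loss_probability
years_per_expected_loss = 1 / expected_losses_per_year
```

Under these assumptions, storing ten million objects corresponds to an expected loss of a single object roughly once every 10,000 years. This is a design target, not a guarantee.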

Explore analytics use cases brought to life by real customers

Redfin sells faster using massive data stores

Data lakes allow Redfin to cost-effectively innovate and manage data on hundreds of millions of properties.

Watch the video »

Nasdaq finds big savings with big data

Nasdaq optimized performance, availability, and security while loading seven billion rows of data per day.

Watch the video »

Is ML more than hype?

Cloud computing and machine learning have become accessible to all businesses, not just large tech firms and academic research institutions. Cloud has removed the barriers to experimenting and innovating with ML so that even risk-averse businesses are making it part of their strategies. We have reached a tipping point, where the recent hype for these technologies is transitioning to real business impact. It can be hard to know how to get started with machine learning, so here are a few factors to consider.

Make machine learning the centerpiece of your digital transformation

- Start with data strategy: Effective ML requires clean, accessible data, so be sure to have your data house in order before you begin.
- Identify the business problem: Find your best use cases by considering how ML can delight your customers, increase efficiency and productivity, and drive innovation.
- Try a machine-learning pilot: Evaluate possible pilot projects focused on your priority use cases, then determine what tools, skills, and budget are needed.

Start innovating with ML

There are many uses for ML, but in this context, we want you to think about it as a driver of innovation. As you input analytics into the right ML system, it can provide recommendations and/or automatically apply insights that improve your processes. This can give your Data Flywheel the big organic push it needs to become self-sustaining.

Blockchain without the hype

Blockchain and, more broadly, ledger solutions are just scratching the surface of their capabilities, offering substantial value for a number of diverse industries. These blockchain networks and ledger applications are being used today to solve two types of business needs: tracking and verifying transactions with centralized ownership and executing transactions and contracts with decentralized ownership.

The real needs for blockchain

Ledgers with centralized trust

Creating a ledger owned by a single, centralized entity, in which the ledger serves as a transparent, immutable, and cryptographically verifiable transactional log, is a need that spans industries. Yet building a scalable ledger isn’t easy: traditional approaches are resource intensive, difficult to manage and scale, error-prone and incomplete, and impossible to verify. The solution is not blockchain, but a centralized ledger. With Amazon QLDB, you can put this use case to work with a purpose-built ledger database that offers the transparency, security, immutability, and speed it takes for enterprise use.

Transactions with decentralized trust

Blockchain is enticing when you need to perform transactions quickly across multiple entities, without the need for a trusted, central authority. However, existing frameworks are difficult to set up, hard to scale, complicated to manage, and expensive to operate. Each member in the blockchain network must manually provision hardware, install software, create and manage certificates for access control, and configure networking components. Once the blockchain network is running, users have to continuously monitor the infrastructure and adapt to changes. Amazon Managed Blockchain can help you overcome these challenges and start leveraging blockchain that scales as usage grows, improves reliability, and hardens durability.

Explore real life uses of blockchain and machine learning

T-Mobile uses machine learning to improve personal connections

T-Mobile is serving its customers better and faster by predicting their needs in real time using machine learning on AWS.

Singapore Exchange transacts with blockchain

Using Amazon’s blockchain technology, Singapore Exchange was able to identify new ways of trading financial assets across its global network.